Posted on behalf of:

Minmin Hou, Jian Zhang, Yuan Wu, Kaixuan Liu

Introduction

This document automation reference use case is an end-to-end reference solution in the Intel AI reference architecture. It builds an AI-augmented, multi-modal semantic search system for document images, such as scanned documents and other image files. Its goal is to simplify and speed up document processing so that enterprise users can gain deeper, more timely insights from their documents and focus on their business.

Motivation

Enterprises are accumulating massive quantities of documents, a large portion of which are scanned documents in various image formats [1]. These documents contain a great deal of valuable information, but indexing them and gaining insights from the document images is challenging for the following reasons:

- The end-to-end (E2E) solution involves many components that are cumbersome or challenging to integrate with each other.

- Indexing large collections of document images is very time-consuming. A distributed indexing capability is needed, but there is no end-to-end open-source solution that is ready to use.

- Querying document image collections in natural language requires multi-modal AI that understands both images and language. Building such multi-modal models requires deep expertise in machine learning (ML) and deep learning.

- Deploying multi-modal AI models together with databases and a user interface is not easy, as it requires expertise and skills from several domains.

- Many multi-modality AI models can only comprehend English, and developing non-English models takes time and requires ML experience.

To help enterprises focus on business outcomes instead of spending precious engineering resources on developing a document automation solution from scratch, we have created an open-source AI-based document automation reference solution.

Developers can take the containerized reference solution and adapt it to their own data by configuring settings, following the detailed step-by-step instructions in the README. The AI-based solution can help automate the tedious and time-consuming jobs of extracting, analyzing, and indexing documents while enabling fast search through a natural language interface. The benefits of our reference use case include the following:

- Improved developer productivity: The three pipelines in this reference use case are all containerized and can be customized through either command-line arguments or config files. Developers can bring their own data and quickly jump-start development and customization.

- Excellent retrieval recall and mean reciprocal rank (MRR) on the benchmark dataset: We demonstrated that the AI-augmented ensemble retrieval method achieves higher recall and MRR than both the non-AI retrieval method (BM25) and the results reported in the DuReader-vis paper [6], evaluated on DuReader-vis, the largest open-source document visual retrieval dataset (158k raw images in total).

- Better performance: The distributed image-to-document indexing capability accelerates the indexing process and shortens development time.

- Easy to deploy: Using two Docker containers from Intel's open-domain question answering workflow and two other open-source containers, you can deploy the retrieval solution by customizing the config files and running the launch script provided in this reference use case.

- Multilingual customizable models: You can use our pipelines to develop and deploy your models in many languages.

Overview of the Document Automation Reference Use Case

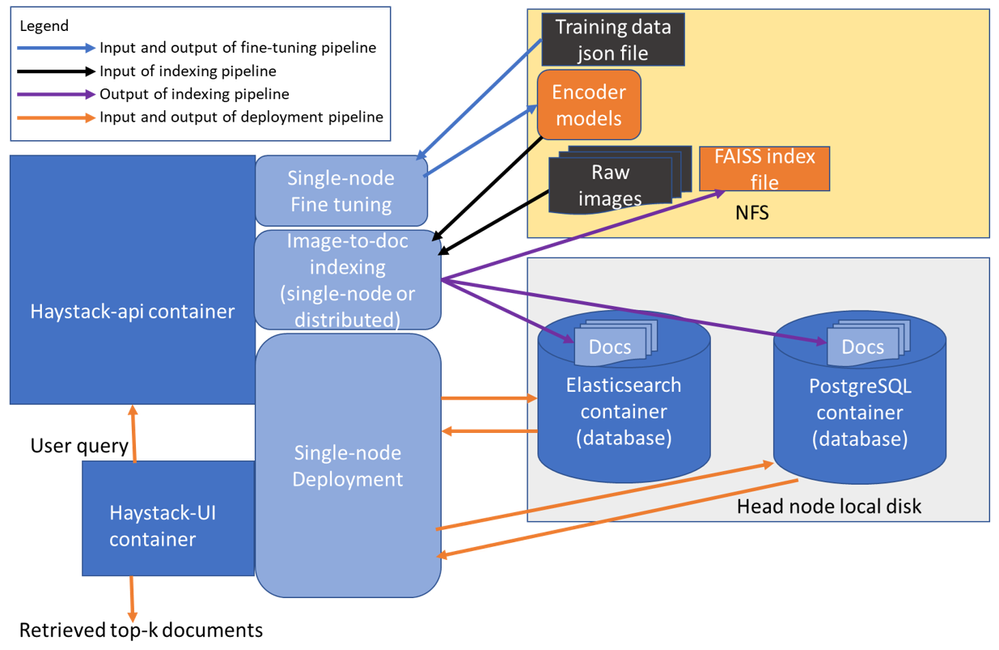

In this reference use case, we implement and demonstrate a complete end-to-end solution that helps enterprises tackle these challenges and jump-start the customization of the reference solution for their own document archives. The architecture of the reference use case is shown in Figure 1 and includes these three pipelines:

- Single-node Dense Passage Retriever (DPR) fine-tuning pipeline

- Image-to-document indexing pipeline (can be run on either a single node or distributed on multiple nodes)

- Single-node deployment pipeline

Figure 1: Architecture for document automation

Dense Passage Retriever (DPR) Fine Tuning

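Dense Passage Retriever (DPR) is a dual-encoder retriever based on transformers: a query encoder and a document encoder map queries and passages to dense vectors, and the inner product of the two vectors scores relevance. The DPR paper [2] gives an in-depth description of the model architecture and the fine-tuning algorithm.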

In this reference use case, we used a pretrained cross-lingual language model available on the Hugging Face model hub, namely, the infoxlm-base [3] model pretrained by Microsoft, as the starting point for both the query encoder and document encoder. We fine-tuned the encoders with in-batch negatives.
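For readers new to in-batch negatives, here is a minimal pure-Python sketch of the loss described in the DPR paper [2]. It is not the reference solution's training code. It assumes each query in a batch is paired with one positive passage and that the encoders have already produced fixed-size vectors; the function names are hypothetical. Every other passage in the batch serves as a negative for a given query, so a batch of B pairs yields B - 1 negatives per query at no extra encoding cost.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product, the similarity DPR uses between query and passage vectors."""
    return sum(x * y for x, y in zip(a, b))


def in_batch_negative_loss(queries: List[Vector], passages: List[Vector]) -> float:
    """Average negative log-likelihood of each query's positive passage.

    passages[i] is the positive passage for queries[i]; every other passage
    in the batch acts as a negative for that query.
    """
    if len(queries) != len(passages) or not queries:
        raise ValueError("need an aligned, non-empty batch of (query, passage) pairs")
    total = 0.0
    for i, q in enumerate(queries):
        # One row of the B x B score matrix Q @ P^T.
        scores = [dot(q, p) for p in passages]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(scores)
        log_denominator = m + math.log(sum(math.exp(s - m) for s in scores))
        # -log softmax(scores)[i]: the positive passage sits on the diagonal.
        total += log_denominator - scores[i]
    return total / len(queries)
```

A well-aligned batch (each query closest to its own passage) gives a loss near zero, while a batch where queries match the wrong passages gives a large loss. In PyTorch, the same computation is commonly written as `torch.nn.functional.cross_entropy(q @ p.T, torch.arange(batch_size))`, with gradients flowing back into both encoders.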

We demonstrated that ensembling our fine-tuned DPR with a BM25 retriever [4] (a widely used non-AI retriever) improves retrieval recall and MRR compared with BM25 alone.
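BM25 ranks passages by lexical term overlap. The sketch below implements the standard Okapi BM25 scoring function with the common defaults k1 = 1.2 and b = 0.75 and the non-negative IDF variant used by Lucene. It is an illustration only; the class and parameter names are hypothetical, and it assumes passages are already tokenized (for Chinese text such as DuReader-vis, that would require a word segmenter).

```python
import math
from collections import Counter
from typing import Dict, List


class BM25:
    """Minimal Okapi BM25 scorer over pre-tokenized passages (hypothetical helper)."""

    def __init__(self, corpus: List[List[str]], k1: float = 1.2, b: float = 0.75):
        self.k1, self.b = k1, b
        self.term_freqs = [Counter(doc) for doc in corpus]
        self.doc_lens = [len(doc) for doc in corpus]
        self.avgdl = sum(self.doc_lens) / len(corpus)
        # Document frequency: number of passages that contain each term.
        df: Counter = Counter()
        for tf in self.term_freqs:
            df.update(tf.keys())
        n = len(corpus)
        # Non-negative IDF variant: log(1 + (N - df + 0.5) / (df + 0.5)).
        self.idf: Dict[str, float] = {
            t: math.log(1 + (n - d + 0.5) / (d + 0.5)) for t, d in df.items()
        }

    def score(self, query: List[str], index: int) -> float:
        """BM25 score of passage `index` for the tokenized query."""
        tf, dl = self.term_freqs[index], self.doc_lens[index]
        s = 0.0
        for term in query:
            f = tf.get(term, 0)
            if f == 0:
                continue  # terms absent from the passage contribute nothing
            # Term-frequency saturation (k1) and length normalization (b).
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            s += self.idf[term] * f * (self.k1 + 1) / norm
        return s

    def top_k(self, query: List[str], k: int) -> List[int]:
        """Indices of the k highest-scoring passages (ties broken by index)."""
        scored = [(self.score(query, i), i) for i in range(len(self.doc_lens))]
        return [i for _, i in sorted(scored, key=lambda x: (-x[0], x[1]))[:k]]
```

Because BM25 only rewards exact term matches, it complements a dense retriever that can match paraphrases, which is one intuition for why combining the two helps.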

Image-to-Document Indexing

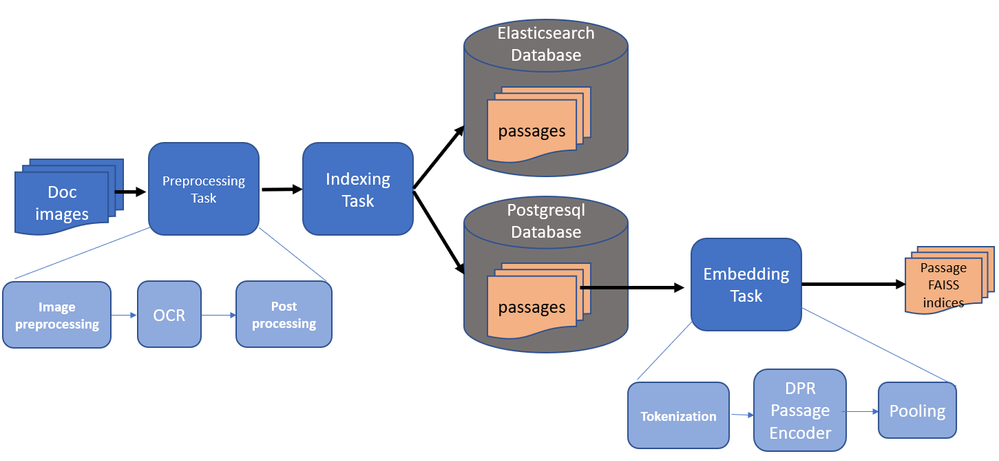

To retrieve documents in response to queries, we first need to index them: the raw document images are converted into text passages, which are then stored in databases with indices. Figure 2 shows the architecture of the indexing pipeline, which consists of three tasks:

- Preprocessing task: This task converts images into text passages in three steps: image preprocessing, text extraction with optical character recognition (OCR), and post-processing of the OCR outputs.

- Indexing task: This task places text passages produced by the preprocessing task into one or two databases depending on the user-specified retrieval method.

- Embedding task: This task uses the DPR document encoder to generate dense vector representations of the text passages and then builds a FAISS index file from these vectors. FAISS [5] is a similarity search library for dense vectors. At retrieval time, the DPR query encoder converts the query into a vector, which is used to search the FAISS index for the passage vectors most similar to the query vector. The embedding task is required for the DPR and ensemble retrieval methods but not for BM25 retrieval.
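The text does not spell out how OCR output is turned into passages. As a hypothetical illustration of the post-processing step, the function below cleans OCR lines and cuts the resulting text into fixed-size, overlapping character windows. The name `ocr_lines_to_passages` and the `max_chars` and `overlap` parameters are assumptions for this sketch, not settings of the reference pipeline.

```python
import re
from typing import List


def ocr_lines_to_passages(lines: List[str], max_chars: int = 500, overlap: int = 50) -> List[str]:
    """Hypothetical post-processing: clean OCR lines and split them into passages.

    A character-based sliding window is used so the function also works for
    text without whitespace word boundaries, such as Chinese.
    """
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be in [0, max_chars)")
    # Collapse whitespace runs left by OCR and drop lines that end up empty.
    cleaned = [re.sub(r"\s+", " ", line).strip() for line in lines]
    text = " ".join(line for line in cleaned if line)
    passages = []
    step = max_chars - overlap
    for start in range(0, len(text), step):
        passages.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break  # this window already reaches the end of the text
    return passages
```

Consecutive passages share `overlap` characters, which reduces the chance that an answer split across a window boundary is lost, at the cost of some duplicated text in the index.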

Figure 2: Image-to-Document indexing pipeline
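FAISS offers many index types, and the text does not say which one the pipeline builds. The simplest conceptually is exact (flat) maximum-inner-product search, which FAISS provides as `IndexFlatIP`. The pure-Python class below is a hypothetical stand-in that shows what such a flat index computes over DPR passage vectors; a real deployment would use FAISS itself for speed.

```python
import heapq
from typing import List, Tuple

Vector = List[float]


class FlatInnerProductIndex:
    """Brute-force maximum-inner-product search (hypothetical stand-in for a flat FAISS index)."""

    def __init__(self, dim: int):
        self.dim = dim
        self.vectors: List[Vector] = []

    def add(self, vectors: List[Vector]) -> None:
        """Append passage vectors; a passage's id is its insertion position."""
        for v in vectors:
            if len(v) != self.dim:
                raise ValueError(f"expected dimension {self.dim}, got {len(v)}")
            self.vectors.append(list(v))

    def search(self, query: Vector, k: int) -> List[Tuple[float, int]]:
        """Return up to k (score, passage_id) pairs, highest inner product first."""
        scored = ((sum(q * x for q, x in zip(query, v)), i) for i, v in enumerate(self.vectors))
        # nlargest with a key is stable, so equal scores keep insertion order.
        return heapq.nlargest(k, scored, key=lambda pair: pair[0])
```

With FAISS, the equivalent calls are `index = faiss.IndexFlatIP(dim)`, `index.add(passage_vectors)`, and `scores, ids = index.search(query_vectors, k)`, where the vectors are float32 NumPy arrays.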

Deployment

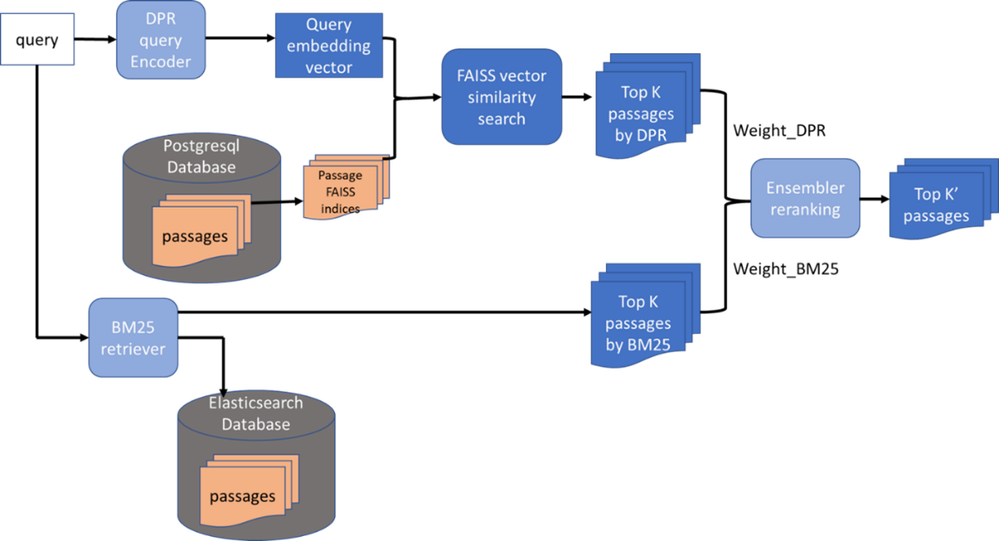

After the DPR encoders are fine-tuned and the document images are indexed into databases as text passages, we can deploy a retrieval system on a server with Docker containers and retrieve documents in response to user queries. Once the deployment pipeline is launched, users can interact with the retrieval system through a web user interface (UI) and submit queries in natural language. The retrievers search the databases for the most relevant text passages and return them for display in the web UI. Figure 3 shows how the BM25 and DPR retrievers each retrieve the top-K passages and how the ensemble re-ranks these passages with weighting factors to improve on the recall and MRR of the individual retrievers.

Figure 3: Deployment pipeline
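The text states only that the ensemble re-ranks the BM25 and DPR top-K passages with weighting factors. One common way to do this, assumed here, is to min-max normalize each retriever's scores (raw BM25 scores and DPR inner products live on different scales) and then take a weighted sum. The function names and the default weights of 0.5 are hypothetical.

```python
from typing import Dict, List, Tuple


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale one retriever's scores to [0, 1] so different retrievers are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {pid: 1.0 for pid in scores}
    return {pid: (s - lo) / (hi - lo) for pid, s in scores.items()}


def ensemble_rerank(
    bm25_hits: Dict[str, float],
    dpr_hits: Dict[str, float],
    bm25_weight: float = 0.5,
    dpr_weight: float = 0.5,
    top_k: int = 10,
) -> List[Tuple[str, float]]:
    """Weighted fusion of two top-K lists; a passage missing from one list gets 0 from it."""
    bm25_norm = min_max_normalize(bm25_hits)
    dpr_norm = min_max_normalize(dpr_hits)
    fused = {
        pid: bm25_weight * bm25_norm.get(pid, 0.0) + dpr_weight * dpr_norm.get(pid, 0.0)
        for pid in set(bm25_norm) | set(dpr_norm)
    }
    # Highest fused score first; ties broken by passage id for determinism.
    return sorted(fused.items(), key=lambda item: (-item[1], item[0]))[:top_k]
```

Because a passage found by only one retriever receives nothing from the other, passages that both retrievers rank highly tend to rise to the top. The weights would normally be tuned on held-out queries.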

Performance

Testing methodology

To measure the benefits of this document automation use case, we evaluated three retrieval methods (BM25 only, DPR only, and the BM25 + DPR ensemble) and compared their Top-5 and Top-10 recall and MRR. The test indexes the entire DuReader-vis dataset (158k images in total) and then computes top-N recall and MRR for the test-set queries.
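To make the metrics concrete, the sketch below computes Recall@K and MRR@K over a set of test queries. It assumes Recall@K is the fraction of queries with at least one relevant passage in the top K (the convention used in DPR-style evaluations) and that MRR@K assigns 0 to queries with no relevant passage in the top K; the exact definitions in the evaluation scripts may differ, and the function name is hypothetical.

```python
from typing import List, Sequence, Set, Tuple


def recall_and_mrr_at_k(
    ranked_lists: Sequence[List[str]],
    relevant_sets: Sequence[Set[str]],
    k: int,
) -> Tuple[float, float]:
    """Return (Recall@k, MRR@k) averaged over queries.

    Recall@k: fraction of queries with at least one relevant passage in the top k.
    MRR@k: mean of 1/rank of the first relevant passage in the top k (0 if none).
    """
    if len(ranked_lists) != len(relevant_sets) or not ranked_lists:
        raise ValueError("need the same, non-zero number of rankings and relevance sets")
    hits, reciprocal_ranks = 0, 0.0
    for ranking, relevant in zip(ranked_lists, relevant_sets):
        for rank, pid in enumerate(ranking[:k], start=1):
            if pid in relevant:
                hits += 1
                reciprocal_ranks += 1.0 / rank
                break  # only the first relevant passage counts
    n = len(ranked_lists)
    return hits / n, reciprocal_ranks / n
```

Recall@K only asks whether a relevant passage appears at all, while MRR@K also rewards placing it near the top, which is why the two metrics can move differently between retrieval methods.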

Results

Table 1 shows that the ensemble retrieval method (BM25 + DPR) improves retrieval recall and MRR over both the BM25-only and DPR-only methods. It achieves 0.7989, 0.8452, and 0.6782 on Top-5 Recall, Top-10 Recall, and Top-10 MRR, respectively. Compared with the baseline results reported in the DuReader-vis paper [6], this is an improvement of 3.6, 2.7, and 2.7 percentage points on Top-5 Recall, Top-10 Recall, and Top-10 MRR, respectively.

| Method | Top-5 Recall | Top-5 MRR | Top-10 Recall | Top-10 MRR |
|---|---|---|---|---|
| BM25 only (ours) | 0.7665 | 0.6310 | 0.8333 | 0.6402 |
| DPR only (ours) | 0.6362 | 0.4997 | 0.7097 | 0.5094 |
| Ensemble (ours) | 0.7989 | 0.6717 | 0.8452 | 0.6782 |
| Baseline [6] | 0.7633 | did not report | 0.8180 | 0.6508 |

Table 1: Retrieval performance on the entire indexed DuReader-vis dataset
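The gains over the baseline in Table 1 are absolute differences in percentage points, not relative improvements. The short check below recomputes both from the table values; the dictionary keys and the `improvements` helper are just labels chosen for this sketch.

```python
from typing import Dict, Tuple

# Values copied from Table 1 (Ensemble row vs. Baseline [6] row).
ENSEMBLE = {"top5_recall": 0.7989, "top10_recall": 0.8452, "top10_mrr": 0.6782}
BASELINE = {"top5_recall": 0.7633, "top10_recall": 0.8180, "top10_mrr": 0.6508}


def improvements(ours: Dict[str, float], baseline: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """Return {metric: (absolute gain in percentage points, relative gain in percent)}."""
    return {
        metric: (
            round((ours[metric] - baseline[metric]) * 100, 1),
            round((ours[metric] / baseline[metric] - 1) * 100, 1),
        )
        for metric in ours
    }


for name, (points, relative) in improvements(ENSEMBLE, BASELINE).items():
    print(f"{name}: +{points} points ({relative}% relative)")
```

For example, the ensemble's Top-5 recall is 3.6 points above the baseline, which corresponds to a relative gain of about 4.7%.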

Get the Software

The document automation reference use case is an end-to-end reference solution for building an AI-augmented, multi-modal semantic search system for document images. It improves developer productivity for data scientists by providing low-code or no-code ways to build document and image search solutions, while demonstrating the retrieval improvements shown in Table 1. Check out the document-automation repository on GitHub and try it for yourself.

We also encourage you to check out Intel’s other AI Tools and Framework optimizations and learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio.

References

[1] Tanaka, Ryota, Kyosuke Nishida, and Sen Yoshida. "VisualMRC: Machine Reading Comprehension on Document Images." Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 35. No. 15. 2021.

[2] Karpukhin, Vladimir, et al. "Dense passage retrieval for open-domain question answering." arXiv preprint arXiv:2004.04906 (2020).

[3] Chi, Zewen, et al. "InfoXLM: An information-theoretic framework for cross-lingual language model pre-training." arXiv preprint arXiv:2007.07834 (2020).

[4] Okapi BM25

[6] Qi, Le, et al. "DuReader_vis: A Chinese Dataset for Open-domain Document Visual Question Answering." Findings of the Association for Computational Linguistics: ACL 2022.

Product and Performance Information

System Configurations

Note that indexing the entire DuReader-vis [6] dataset can take days, depending on the type and number of CPU nodes used for the indexing pipeline. This reference use case provides a multi-node distributed indexing pipeline to accelerate the process. We recommend using at least two nodes with the hardware specifications listed in Table 2. A network file system (NFS) is required for the distributed indexing pipeline. Tables 2 and 3 show the hardware and software configurations used for the performance evaluation. Tests were done by Intel in April 2023.

| Name | Specification |
|---|---|
| CPU Model | Intel® Xeon® Platinum 8352Y CPU @ 2.20GHz |
| CPU(s) | 128 |
| Memory Size | 512 GB |
| Network | MT27700 Family [ConnectX-4] |
| Number of nodes | 2 |

Table 2. Hardware Configuration

| Name | Description |
|---|---|
| Framework/Toolkit incl. version | PyTorch 2.0.0, FAISS 1.7.2, PostgreSQL 14.1, Elasticsearch 7.9.2, Ray 2.2.0 |
| Framework URL | |
| Topology or ML algorithm | DPR [2] ensembled with BM25 [4] |
| Dataset (size, shape) | DuReader-vis (158k images) |
| Pretrained model | microsoft/infoxlm-base [3] |
| Precision (FP32, INT8, BF16) | FP32 |
| Command line used | Docker Compose |
| Training methodology | DPR encoders fine-tuned from microsoft/infoxlm-base; batch size: 128; epochs: 3 |

Table 3. Software Configuration

Notices & Disclaimers

Performance varies by use, configuration, and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software, or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Product Marketing Engineer bringing cutting edge AI/ML solutions and tools from Intel to developers.
