Ramya Ravi, AI Software Marketing Engineer, Intel

Large neural network models are at the heart of many recent advances in AI and deep learning applications. Large language models (LLMs) are a class of deep learning models that can understand and generate human-like language. LLMs are trained on large amounts of text, learning the patterns and relationships between words, phrases, and sentences, which allows them to generate new content.

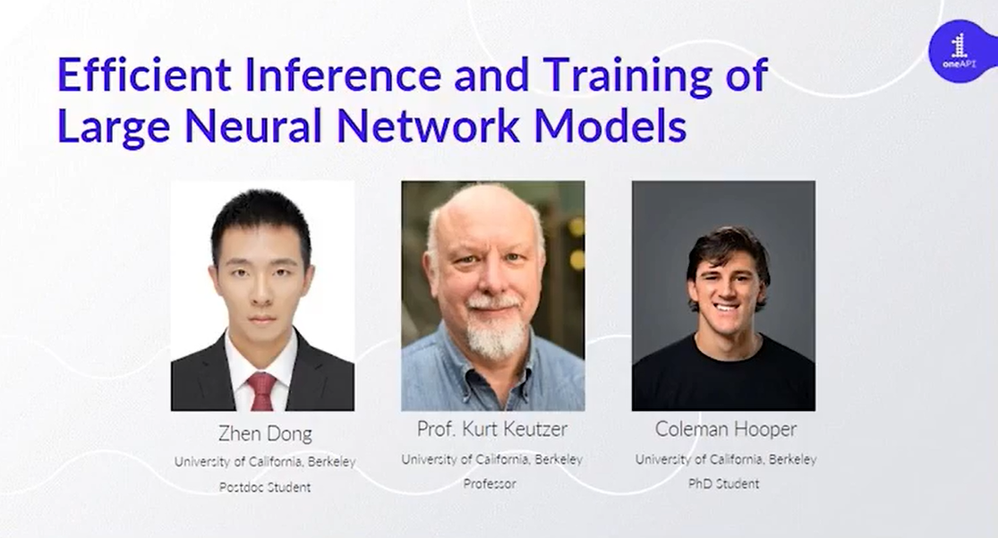

At the oneAPI DevSummit for AI 2023, Kurt Keutzer, Zhen Dong, and Coleman Hooper from UC Berkeley delivered a tech talk on Efficient Inference and Training of Large Neural Network Models.

In this tech talk, Professor Keutzer began by introducing his research group’s focus on efficient inference at the edge and efficient training and inference in the cloud. His group also developed its own family of neural networks, known as the “Squeeze” family.

Next, Coleman showed that the dominant contributor to LLM inference runtime is the time spent loading parameters from memory into the processor, not the time spent on computation. He explained that quantization is therefore critical for efficient LLMs: it reduces the memory footprint and peak memory requirements, and it improves inference latency. He also described the research group’s approach to efficiently quantizing LLMs down to low precision using:

- Sensitivity-Aware Non-Uniform Quantization

- Dense and Sparse Quantization
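
To make the memory-bound argument concrete, the following back-of-the-envelope sketch estimates the time for one decoding step of a hypothetical 7-billion-parameter model at batch size 1. It assumes every weight is read from memory once per generated token and contributes one multiply-add; the bandwidth (300 GB/s) and compute (100 TFLOPS) figures are illustrative placeholders, not measurements from the talk.

```python
# Back-of-the-envelope model of single-batch LLM decoding (one token at a time).
# All hardware and model numbers are hypothetical placeholders.


def decode_step_times(num_params: float, bits_per_weight: float,
                      mem_bandwidth_gbs: float, peak_tflops: float) -> tuple[float, float]:
    """Return (memory_time_s, compute_time_s) for generating one token.

    Assumes every weight is read from memory once per token and contributes
    one multiply-add (2 FLOPs) per token at batch size 1.
    """
    bytes_moved = num_params * bits_per_weight / 8
    memory_time = bytes_moved / (mem_bandwidth_gbs * 1e9)
    compute_time = 2 * num_params / (peak_tflops * 1e12)
    return memory_time, compute_time


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    for bits in (16, 8, 4, 3):
        mem_t, comp_t = decode_step_times(params, bits, mem_bandwidth_gbs=300, peak_tflops=100)
        print(f"{bits:>2}-bit weights: memory {mem_t * 1e3:6.2f} ms, "
              f"compute {comp_t * 1e3:5.2f} ms per token")
```

Under these assumptions the memory time exceeds the compute time by well over an order of magnitude at every precision shown, and it shrinks in proportion to the bits per weight. That is why lowering weight precision translates almost directly into lower latency when decoding is memory-bound.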

He noted that Intel’s AI tools and frameworks helped their research by using parallelization to speed up the sensitivity-based non-uniform quantization algorithm.
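
Here is a simplified, pure-Python sketch of the two quantization ideas listed above, assuming per-weight sensitivity scores are already available (for example, squared gradients used as a Fisher-information proxy; that choice is an assumption, not a detail from the talk). Each row is split into a sparse set of full-precision outliers and a dense remainder, and the dense values are clustered with sensitivity-weighted 1-D k-means so that the 2^bits centroids land closer to the weights that matter most. Because rows are quantized independently, the work maps naturally onto parallel workers. All function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
import random


def weighted_kmeans_1d(values, weights, k, iters=20):
    """Cluster scalars into k centroids, minimizing sum(w * (v - c) ** 2)."""
    sorted_vals = sorted(values)
    n = len(sorted_vals)
    # Initialize centroids at evenly spaced quantiles of the data.
    centroids = [sorted_vals[min(n - 1, (2 * i + 1) * n // (2 * k))] for i in range(k)]
    assignment = [0] * n
    for _ in range(iters):
        # Assignment step: each value goes to its nearest centroid.
        assignment = [min(range(k), key=lambda j: (v - centroids[j]) ** 2) for v in values]
        # Update step: each centroid becomes the sensitivity-weighted mean of its cluster.
        for j in range(k):
            den = sum(w for w, a in zip(weights, assignment) if a == j)
            if den > 0:
                num = sum(w * v for v, w, a in zip(values, weights, assignment) if a == j)
                centroids[j] = num / den
    return centroids, assignment


def quantize_row(row, sensitivity, bits=3, outlier_frac=0.01):
    """Dense-and-sparse split, then non-uniform quantization of the dense part."""
    n = len(row)
    num_outliers = int(n * outlier_frac)
    # Sparse part: the largest-magnitude weights stay in full precision.
    by_magnitude = sorted(range(n), key=lambda i: abs(row[i]), reverse=True)
    sparse = {i: row[i] for i in by_magnitude[:num_outliers]}
    dense_idx = [i for i in range(n) if i not in sparse]
    centroids, assign = weighted_kmeans_1d(
        [row[i] for i in dense_idx], [sensitivity[i] for i in dense_idx], 2 ** bits)
    # Dense part: a small codebook plus one `bits`-wide code per weight.
    codes = [0] * n  # outlier positions keep a placeholder code
    for i, a in zip(dense_idx, assign):
        codes[i] = a
    return centroids, codes, sparse


def dequantize_row(centroids, codes, sparse):
    # Look up each code in the codebook, then restore the full-precision outliers.
    row = [centroids[c] for c in codes]
    for i, v in sparse.items():
        row[i] = v
    return row


def quantize_matrix(weight_rows, sensitivity_rows, bits=3, outlier_frac=0.01, workers=4):
    # Rows are independent, so they can be handed to parallel workers.
    def work(pair):
        return quantize_row(pair[0], pair[1], bits, outlier_frac)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(work, zip(weight_rows, sensitivity_rows)))


if __name__ == "__main__":
    rng = random.Random(0)
    rows = [[rng.gauss(0, 1) for _ in range(64)] for _ in range(8)]
    # Hypothetical sensitivity scores; in practice these would come from the model.
    sens = [[rng.random() for _ in range(64)] for _ in range(8)]
    packed = quantize_matrix(rows, sens, bits=3, outlier_frac=0.05)
    err = max(abs(a - b) for row, p in zip(rows, packed)
              for a, b in zip(row, dequantize_row(*p)))
    print(f"max abs reconstruction error: {err:.3f}")
```

A thread pool keeps the example self-contained, but CPU-bound pure Python is limited by the GIL; a real implementation would use processes, vectorized libraries, or native code to get an actual speedup from the per-row parallelism.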

Finally, Zhen explained that for large recommendation models such as the Deep Learning Recommendation Model (DLRM), the research group proposed Deep Quantized Recommendation Models (DQRM) to reduce overfitting and the cost of communication during training on distributed systems. He also explained gradient sparsification and quantization.
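
As a generic illustration of gradient sparsification and quantization (not the exact DQRM scheme), the following sketch keeps only the k largest-magnitude gradient entries, quantizes them to signed 8-bit codes with a single scale factor, and carries the dropped remainder into the next step. That last part, error feedback, is a common companion technique and an assumption here. All function names are hypothetical.

```python
def sparsify_and_quantize(grad, k, bits=8):
    """Keep the k largest-magnitude entries and quantize them uniformly.

    Returns (indices, codes, scale): the compact message a worker would send.
    """
    # Top-k sparsification: indices of the k largest |g|.
    idx = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)[:k]
    max_abs = max((abs(grad[i]) for i in idx), default=0.0)
    levels = 2 ** (bits - 1) - 1  # symmetric signed range, e.g. [-127, 127] for 8 bits
    scale = max_abs / levels if max_abs > 0 else 1.0
    codes = [round(grad[i] / scale) for i in idx]
    return idx, codes, scale


def decode(n, idx, codes, scale):
    # Receiver side: scatter dequantized values into a dense zero vector.
    out = [0.0] * n
    for i, c in zip(idx, codes):
        out[i] = c * scale
    return out


def compress_with_error_feedback(grad, residual, k, bits=8):
    # Add back what was dropped last step so small gradients are not lost forever.
    corrected = [g + r for g, r in zip(grad, residual)]
    idx, codes, scale = sparsify_and_quantize(corrected, k, bits)
    sent = decode(len(grad), idx, codes, scale)
    new_residual = [c - s for c, s in zip(corrected, sent)]
    return (idx, codes, scale), new_residual


def message_bits(k, bits, index_bits=32):
    # Payload size: k indices + k codes + one FP32 scale factor.
    return k * (index_bits + bits) + 32


if __name__ == "__main__":
    grad = [0.01, -0.8, 0.05, 0.3, -0.02, 0.0, 0.6, -0.1]
    (idx, codes, scale), residual = compress_with_error_feedback(grad, [0.0] * len(grad), k=3)
    print("sent indices:", idx, "codes:", codes)
    print("carried-over residual:", [round(r, 4) for r in residual])
```

For a 1,000,000-element gradient, a dense FP32 message is 32,000,000 bits, while keeping 1% of the entries with 32-bit indices and 8-bit codes needs `message_bits(10_000, 8)` = 400,032 bits, roughly 80x less traffic.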

Watch the full video recording and download the presentation.

What’s Next?

Get started with LLMs and check out another tech talk on how the Intel® Distribution of OpenVINO™ toolkit helps accelerate the end-to-end process of building, optimizing, and deploying generative AI.

We also encourage you to check out Intel’s other AI/ML framework optimizations and end-to-end portfolio of tools and incorporate them into your AI workflow. You can also learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio and helps you prepare, build, deploy, and scale your AI solutions.

For more details about 4th Gen Intel® Xeon® Scalable processors, visit the AI Platform page to learn how Intel empowers developers to run end-to-end AI pipelines on these powerful CPUs.

About the Speakers

Kurt Keutzer, University of California, Berkeley

Kurt Keutzer is a Professor at the University of California, Berkeley. He is a member of the Berkeley AI Research (BAIR) Lab and co-director of the Berkeley Deep Drive research consortium. His “Squeeze” family of DNNs was among the first DNNs suitable for mobile applications.

Zhen Dong, University of California, Berkeley

Zhen Dong received his bachelor's degree from Peking University in 2018 and his Ph.D. from the University of California, Berkeley in 2022. He is currently a postdoc at UC Berkeley working with Prof. Kurt Keutzer.

Coleman Hooper, University of California, Berkeley

Coleman Hooper is a graduate student at UC Berkeley pursuing a PhD in Electrical Engineering. He is affiliated with the Specialized Computing Ecosystems (SLICE) lab and with BAIR. His research interests include hardware acceleration and hardware-software co-design for machine learning, with a particular focus on NLP applications.

Useful Resources

Product Marketing Engineer bringing cutting edge AI/ML solutions and tools from Intel to developers.