Microsoft Azure Cognitive Services enable applications to consume AI capabilities via APIs and SDKs (Reference 1). They can be viewed as “AI Inferencing as a Service”: ready-made AI capabilities in the areas of vision, speech, language, and decision. The services package AI algorithms and pre-trained models for specific domains that application developers can use directly, so organizations do not need to build in-house AI and data science expertise. Instead, application developers can focus on business logic and call these services where AI capabilities are needed. For example, the Read API in the Vision services extracts text from images: an application passes an image to the service via an API call and receives the recognized text as structured strings in return. Gartner refers to this category of the AI market as “Cloud AI Developer Services”, and the associated vendor landscape is available in its Magic Quadrant (Reference 3).

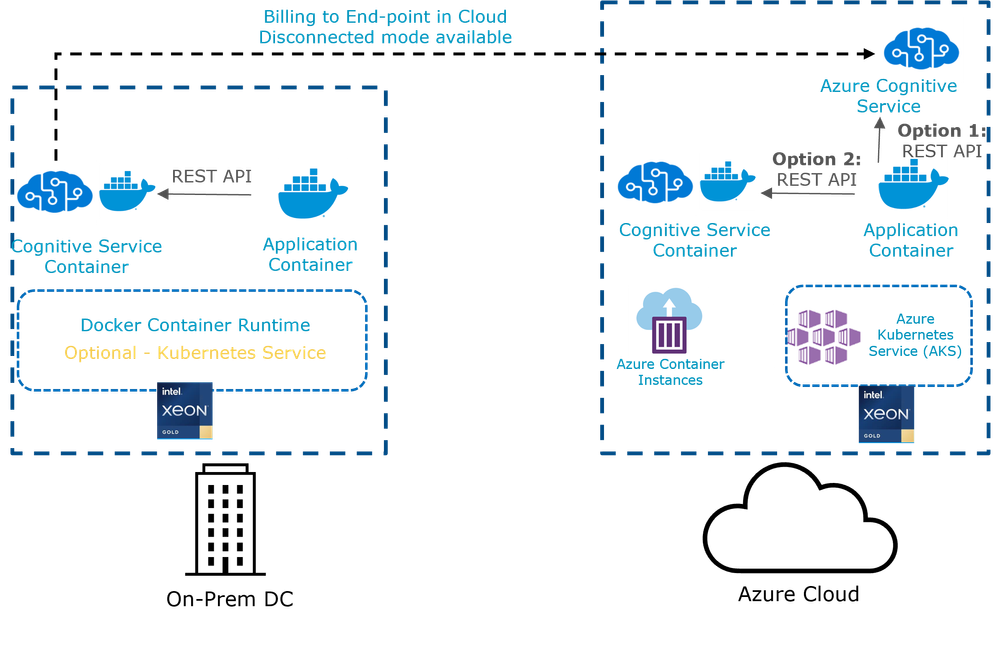

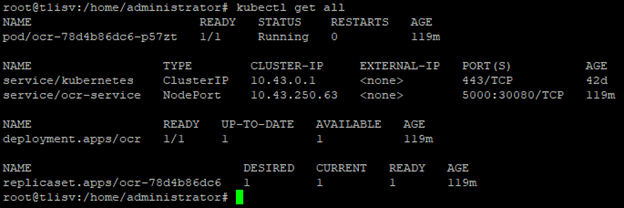

Cognitive Services can be consumed from an application through client SDK libraries in several programming languages, or directly through the REST API over standard HTTP. In Azure, these services are exposed as API calls that cloud applications can use to consume AI skills directly. Azure Cognitive Services containers (Reference 2) make the same services available on-premises as downloadable Docker container images, as shown in Figure 1. Note that the set of Cognitive Services available as containers is a subset of what is available in the Azure cloud. The containers can also be hosted in Azure on Azure Container Instances or Azure Kubernetes Service instead of using the cloud service directly, as shown in Figure 1.

The containers must be connected to the Azure cloud so that API usage can be metered and billed to an Azure public cloud subscription. Pricing for these connected containers follows a consumption model based on API calls (pay as you go per transaction). Certain containers are also supported on-premises while disconnected from the public cloud; for disconnected containers, pricing is based on a commitment plan (fixed fee). Certain containers, such as Vision and Speech, require Microsoft approval for on-premises use, while Language and Decision containers can be used as-is with an Azure cloud subscription.

Figure 1: Azure Cognitive Services Overview

This article covers a proof of concept (PoC) that deploys Azure Cognitive Services containers on-premises on an Intel Xeon platform, and demonstrates inference applications consuming the services via API calls.

1 PROOF OF CONCEPT (POC) CONFIGURATION

As a proof of concept, a Kubernetes deployment was set up at one of Intel's on-site lab locations. A dual-socket server with 2 x Intel(R) Xeon(R) Gold 6348 CPUs (3rd Generation Intel Xeon Scalable processors, code name Ice Lake) was configured with a single-node Rancher Kubernetes implementation (Reference 4), and a Kubernetes workload cluster was created on that single node. For an on-premises production deployment, this could instead be a multi-node SUSE Rancher implementation, Azure Kubernetes Service deployed on Azure Stack HCI, VMware Tanzu on VMware vSphere, Red Hat OpenShift, or another supported Kubernetes platform. A production deployment could also use plain Docker without Kubernetes orchestration and management.

The PoC configuration used for the demonstration is shown in Figure 2 below.

2 COGNITIVE SERVICES CONTAINER DEPLOYMENT

2.1 SENTIMENT ANALYSIS

The Sentiment Analysis container, part of the Azure Language service, was chosen for deployment (Reference 5). The sentiment analysis service analyzes text to determine whether the sentiment it expresses is positive, negative, neutral, or mixed. For example, enterprises can use this capability to analyze customer reviews of products posted on websites or in social media. An application uses the service by making API calls with text as input and receiving the sentiment attributes as output.

A Language resource named “azcoglang” was created under an Azure cloud subscription. The resource was hosted under a resource group named “azcog” as shown in Figure 3 below.

Figure 3: Azure Language Service

The sentiment analysis container was deployed using a Helm chart on the local single-node Kubernetes installation. The chart was based on a Microsoft sample posted online (Reference 6), trimmed down to deploy only the sentiment analysis container with no other applications; its details are provided in the Appendix. The Docker image for the sentiment analysis container (English-language version) was pulled from the Microsoft Container Registry location below.

mcr.microsoft.com/azure-cognitive-services/textanalytics/sentiment:3.0-en

Configuration values were passed to the container at launch to provide the Azure billing endpoint, the API access key, the EULA acceptance, and the HTTP proxy URL used by the Kubernetes node for outbound internet communication. In the Helm chart, these are set as container environment variables (see the Appendix).
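When a container is run with plain Docker instead of Kubernetes, the same settings can be passed as `Key=value` arguments after the image name. The Python sketch below only assembles that argument list. The helper name `build_docker_run_args` is hypothetical, the 8 GB memory and 1 CPU figures simply mirror the resource limits in the Appendix chart, and the `HTTP_PROXY=` argument form is an assumption to verify against Microsoft's container configuration documentation.

```python
import shlex
from typing import List, Optional


def build_docker_run_args(
    image: str,
    billing_endpoint: str,
    api_key: str,
    http_proxy: Optional[str] = None,
    host_port: int = 5000,
) -> List[str]:
    """Assemble a `docker run` argv for a Cognitive Services container (hypothetical helper)."""
    args = [
        "docker", "run", "--rm", "-d",
        "-p", f"{host_port}:5000",         # the container API listens on port 5000 internally
        "--memory", "8g", "--cpus", "1",   # mirrors the Appendix chart's resource limits
        image,
        "Eula=accept",                     # settings are passed after the image name
        f"Billing={billing_endpoint}",
        f"ApiKey={api_key}",
    ]
    if http_proxy:
        # Assumed setting name; check the container configuration docs before relying on it.
        args.append(f"HTTP_PROXY={http_proxy}")
    return args


if __name__ == "__main__":
    argv = build_docker_run_args(
        "mcr.microsoft.com/azure-cognitive-services/textanalytics/sentiment:3.0-en",
        "https://azcoglang.cognitiveservices.azure.com/",
        "<your-api-key>",
    )
    # Print a copy-pasteable command; passing argv to subprocess.run would launch it.
    print(shlex.join(argv))
```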

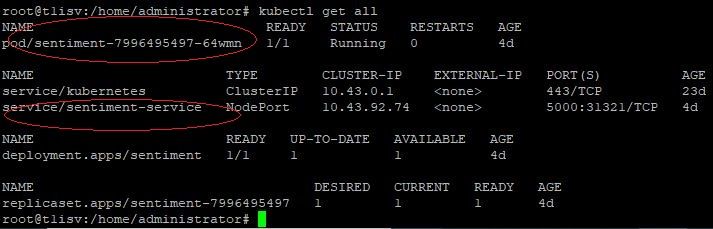

The as-deployed state of the container, running as a pod in Kubernetes, is shown in Figure 4 below.

Figure 4: Kubernetes Deployment

Note that the pod was exposed through a Kubernetes Service of type NodePort so that the API can be reached from outside the cluster using the IP address of the single node. Inside the cluster, the sentiment analysis API listens on TCP port 5000; externally, it is mapped to a different port on the node (31321 in this deployment) for outside applications. Because the Service in the Appendix does not specify a nodePort, Kubernetes assigns one from its default NodePort range (30000 to 32767).
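Since the node port is assigned at deploy time, a client has to discover it before calling the API. A minimal sketch, assuming `kubectl` is configured for the cluster and the Service is named `sentiment-service` in the `default` namespace (as in the Appendix chart), reads the Service as JSON and builds the external base URL. The function names are hypothetical.

```python
import json
import subprocess
from typing import Any, Dict


def get_service(name: str, namespace: str = "default") -> Dict[str, Any]:
    """Fetch a Kubernetes Service object via `kubectl get svc -o json`."""
    out = subprocess.run(
        ["kubectl", "get", "svc", name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def base_url_from_service(service: Dict[str, Any], node_ip: str, container_port: int = 5000) -> str:
    """Return http://<node_ip>:<nodePort> for the Service port that fronts container_port."""
    if service["spec"].get("type") != "NodePort":
        raise ValueError("service is not of type NodePort")
    for port in service["spec"]["ports"]:
        if port["port"] == container_port:
            return f"http://{node_ip}:{port['nodePort']}"
    raise LookupError(f"no service port {container_port} found")
```

For the deployment described here, `base_url_from_service(get_service("sentiment-service"), "<node IP>")` would yield a URL ending in `:31321`.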

2.1.1 Deployment Verification

The container state can be verified by querying the service API from a client machine outside the Kubernetes cluster by using the Kubernetes node IP.

The output of web browser calls to "http://nodeIP:nodePORT/ready" and "http://nodeIP:nodePORT/status" is listed below:

{"service":"sentimentv3","ready":"ready"}

{"service":"sentimentv3","apiStatus":"Valid","apiStatusMessage":"Api Key is valid, no action needed."}
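The same two checks can be scripted, for example as a pre-flight step in an inference client. The sketch below uses only the Python standard library; the function names are hypothetical, and the expected field values (`"ready"` and `"Valid"`) are taken from the responses shown above.

```python
import json
import urllib.request
from typing import Any, Dict


def fetch_json(url: str, timeout: float = 10.0) -> Dict[str, Any]:
    """GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def is_healthy(ready: Dict[str, Any], status: Dict[str, Any]) -> bool:
    """True when /ready reports "ready" and /status reports a valid API key."""
    return ready.get("ready") == "ready" and status.get("apiStatus") == "Valid"


def check_container(base_url: str) -> bool:
    """Query /ready and /status on a Cognitive Services container."""
    base = base_url.rstrip("/")
    return is_healthy(fetch_json(f"{base}/ready"), fetch_json(f"{base}/status"))
```

Usage: `check_container("http://nodeIP:31321")`, with the real node IP substituted.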

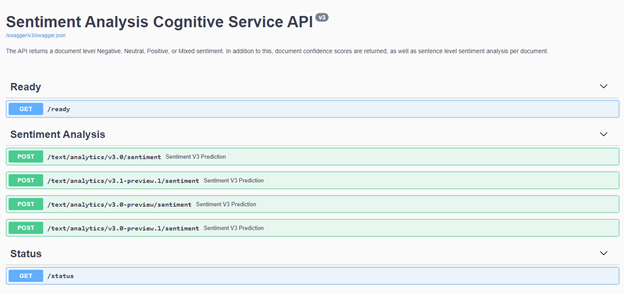

The REST API calls available from the container service can be listed by browsing to "http://nodeIP:nodePORT/swagger". The output is shown in Figure 5 below.

Figure 5: Sentiment Analysis API

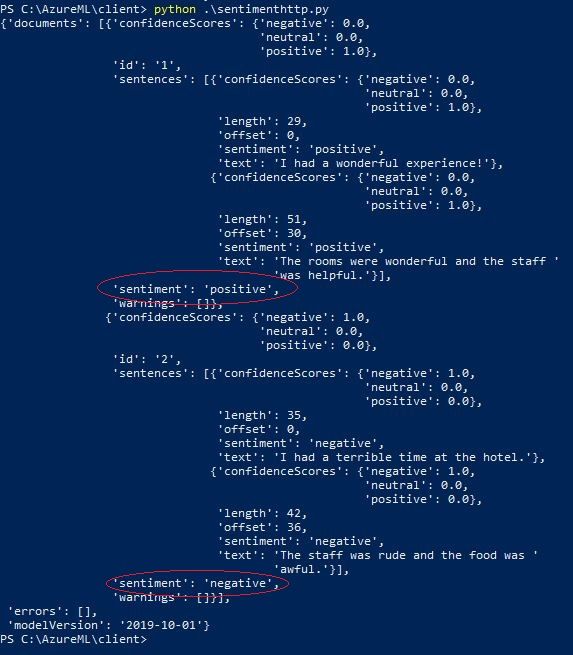

An inference application script was run to exercise the POST REST API call to submit a list of text documents for sentiment analysis. The sample script used for this is available online (Reference 7).

The script submitted the following text items as input:

```python
{'documents': [
    {'id': '1', 'language': 'en', 'text': 'I had a wonderful experience! The rooms were wonderful and the staff was helpful.'},
    {'id': '2', 'language': 'en', 'text': 'I had a terrible time at the hotel. The staff was rude and the food was awful.'},
]}
```
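For reference, a minimal standard-library sketch of such a client follows. It is not the Reference 7 script: the `/text/analytics/v3.0/sentiment` path is an assumption based on the Text Analytics v3.0 API and should be confirmed on the container's `/swagger` page, and the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

SENTIMENT_PATH = "/text/analytics/v3.0/sentiment"  # assumed path; confirm via /swagger


def build_sentiment_request(base_url: str, documents: List[Dict[str, str]]) -> urllib.request.Request:
    """Build the POST request carrying a batch of documents as JSON."""
    body = json.dumps({"documents": documents}).encode("utf-8")
    # Calls to the local container are assumed not to need a subscription key header.
    return urllib.request.Request(
        base_url.rstrip("/") + SENTIMENT_PATH,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze_sentiment(base_url: str, documents: List[Dict[str, str]]) -> Dict[str, Any]:
    """Send the documents to the container and return the decoded JSON response."""
    with urllib.request.urlopen(build_sentiment_request(base_url, documents), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

The `documents` argument takes the same list of `id`/`language`/`text` dictionaries shown above.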

The output of the sentiment analysis API is shown below in Figure 6.

Figure 6: Inference Output

The sentiment analysis service running in the container predicted that the first text item has positive sentiment and the second has negative sentiment, each with associated confidence scores.
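To turn such a response into per-document labels, a client only needs the `sentiment` and `confidenceScores` fields of each document. The helper below assumes the Text Analytics v3.0 response layout (top-level `documents` and `errors` lists); the function name is hypothetical.

```python
from typing import Any, Dict, List, Tuple


def summarize_sentiment(response: Dict[str, Any]) -> List[Tuple[str, str, float]]:
    """Return (id, label, confidence of that label) for each document in the response."""
    rows: List[Tuple[str, str, float]] = []
    for doc in response.get("documents", []):
        label = doc["sentiment"]  # positive, neutral, negative or mixed
        scores = doc.get("confidenceScores", {})
        # "mixed" has no score of its own, so fall back to the highest of the reported scores.
        confidence = scores.get(label, max(scores.values(), default=0.0))
        rows.append((doc["id"], label, confidence))
    for err in response.get("errors", []):
        # Documents the service could not analyze are reported separately.
        rows.append((err.get("id", "?"), "error", 0.0))
    return rows
```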

2.2 READ OCR

The Read OCR (Optical Character Recognition) container, part of Azure Computer Vision, was chosen for deployment (Reference 8). The Read OCR service extracts printed and handwritten text from images and documents, and supports the JPEG, PNG, BMP, PDF, and TIFF file formats. For example, enterprises can use this capability to convert handwritten text in digital images into character-string text documents. An application uses the service by making API calls with image documents as input and receiving the extracted text strings as output. The Read OCR container requires the host CPU to support AVX2 (Advanced Vector Extensions 2), which the Intel processor used in the PoC supports.
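Before scheduling the container on a Linux host, AVX2 support can be confirmed from the CPU flags the kernel reports in `/proc/cpuinfo`. A minimal sketch follows; it assumes a Linux node, and the helper names are hypothetical.

```python
from pathlib import Path
from typing import Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect the CPU feature flags listed on the "flags" lines of /proc/cpuinfo text."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def host_supports_avx2(cpuinfo_path: str = "/proc/cpuinfo") -> bool:
    """True if the kernel reports the avx2 flag for this host (Linux only)."""
    return "avx2" in cpu_flags(Path(cpuinfo_path).read_text())
```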

A Computer Vision resource named “azcogvision” was created under the Azure cloud subscription, hosted in the resource group named “azcog” (similar to Figure 3). The Read OCR container was deployed using a Helm chart on the local single-node Kubernetes installation. The chart was based on the same Microsoft sample (Reference 6), trimmed down to deploy only the Read OCR container with no other applications; it is similar to the Sentiment Analysis chart provided in the Appendix. The Docker image for the Read OCR container was pulled from the Microsoft Container Registry location below.

mcr.microsoft.com/azure-cognitive-services/vision/read:3.2-model-2021-04-12

Configuration values were passed to the container at launch to provide the Azure billing endpoint, the API access key, the EULA acceptance, and the HTTP proxy URL used by the Kubernetes node for outbound internet communication.

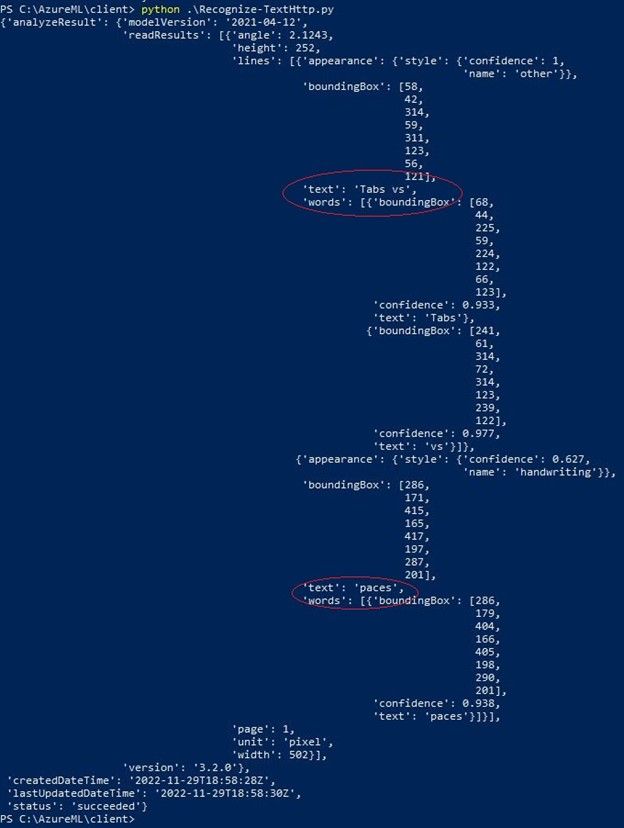

The as-deployed state of the container, running as a pod in Kubernetes, is shown in Figure 7 below.

Figure 7: Kubernetes Deployment

Note that the pod was exposed through a Kubernetes Service of type NodePort so that the API can be reached from outside the cluster using the IP address of the single node. Inside the cluster, the Read OCR API listens on TCP port 5000; externally, it is mapped to a different port on the node (30080 in this deployment) for outside applications.

2.2.1 Deployment Verification

The container state can be verified by querying the service API from a client machine outside the Kubernetes cluster, using the Kubernetes node IP.

The responses to web browser requests for "http://nodeIP:nodePORT/ready" and "http://nodeIP:nodePORT/status" show the container status. The REST API calls available from the container service can be listed by browsing to "http://nodeIP:nodePORT/swagger".

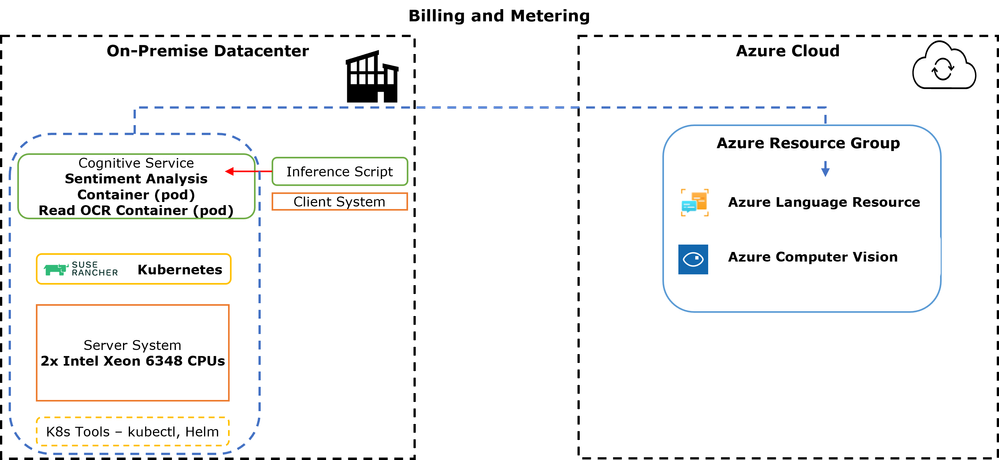

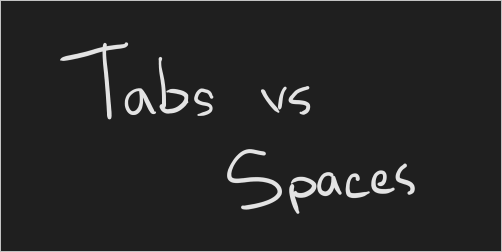

An inference application script was run to exercise the “syncAnalyze” POST REST API call, submitting an image document for text extraction. The sample script used for this is available online (Reference 7).

The script submitted the following image as input to the container API URI "http://nodeIP:nodePORT/vision/v3.2/read/syncAnalyze":

Source: https://learn.microsoft.com/en-us/azure/cognitive-services/computer-vision/media/tabs-vs-spaces.png
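A minimal standard-library sketch of such a call is shown below. It assumes the v3.2 `syncAnalyze` operation accepts raw image bytes with `Content-Type: application/octet-stream` and returns the result directly in the response body; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

READ_PATH = "/vision/v3.2/read/syncAnalyze"


def build_read_request(base_url: str, image_bytes: bytes) -> urllib.request.Request:
    """Build a POST that uploads raw image bytes to the Read container."""
    return urllib.request.Request(
        base_url.rstrip("/") + READ_PATH,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def read_image(base_url: str, image_path: str) -> Dict[str, Any]:
    """Submit a local image file and return the decoded JSON result."""
    with open(image_path, "rb") as f:
        request = build_read_request(base_url, f.read())
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage: `read_image("http://nodeIP:30080", "tabs-vs-spaces.png")`, with the real node IP substituted and the sample image downloaded locally.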

The output of the Read OCR API call is shown below in Figure 9.

Figure 9: Inference Output

The Read OCR service running in the container recognized the text items in the image and returned them with associated confidence scores.
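The recognized text can be pulled out of the response by walking its pages, lines, and words. This sketch assumes the Read v3.2 result layout (`analyzeResult.readResults[].lines[].words[]`, with a `confidence` value per word); the function name and the 0.8 threshold are illustrative choices, not part of the service.

```python
from typing import Any, Dict, List, Tuple


def extract_lines(result: Dict[str, Any], min_confidence: float = 0.8) -> List[Tuple[str, float]]:
    """Return (line text, lowest word confidence) for lines whose words all meet the threshold."""
    lines: List[Tuple[str, float]] = []
    for page in result.get("analyzeResult", {}).get("readResults", []):
        for line in page.get("lines", []):
            confidences = [w.get("confidence", 0.0) for w in line.get("words", [])]
            # A line is only as trustworthy as its least confident word.
            worst = min(confidences, default=0.0)
            if worst >= min_confidence:
                lines.append((line["text"], worst))
    return lines
```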

3 SUMMARY

In this article, we demonstrated Azure Cognitive Services container inference deployment on on-premises Kubernetes infrastructure. The PoC used 3rd Generation Intel Xeon Scalable processors. These processors support Advanced Vector Extensions 2 (AVX2), which is required by certain Cognitive Services containers such as Read OCR in Computer Vision - https://www.intel.com/content/www/us/en/develop/documentation/cpp-compiler-developer-guide-and-reference/top/compiler-reference/intrinsics/intrinsics-for-avx2.html. Intel Xeon processor-based platforms are supported by a variety of enterprise-grade commercial Kubernetes software platforms. Intel also provides several optimizations and features for the cloud-native Kubernetes ecosystem - https://www.intel.com/content/www/us/en/developer/topic-technology/open/cloud-native/overview.html.

4 APPENDIX

4.1 HELM CHART DETAILS

4.1.1 DEPLOYMENT.YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sentiment
  labels:
    app: sentiment-deployment
spec:
  selector:
    matchLabels:
      app: sentiment-app
  template:
    metadata:
      labels:
        app: sentiment-app
    spec:
      containers:
        - name: {{ .Values.sentiment.image.name }}
          image: {{ .Values.sentiment.image.registry }}{{ .Values.sentiment.image.repository }}
          ports:
            - containerPort: 5000
          # Container settings; `quote` keeps URLs and keys as YAML strings
          env:
            - name: EULA
              value: {{ .Values.sentiment.image.args.eula | quote }}
            - name: billing
              value: {{ .Values.sentiment.image.args.billing | quote }}
            - name: apikey
              value: {{ .Values.sentiment.image.args.apikey | quote }}
            - name: HTTP_PROXY
              value: {{ .Values.sentiment.image.args.http_proxy | quote }}
          resources:
            requests:
              memory: "8Gi"
              cpu: "1"
            limits:
              memory: "8Gi"
              cpu: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: sentiment-service
spec:
  type: NodePort
  ports:
    - port: 5000
  selector:
    app: sentiment-app
```

4.1.2 VALUES.YAML

```yaml
# These settings are deployment specific and users can provide customizations
sentiment:
  enabled: true
  image:
    name: cs-sentiment
    registry: mcr.microsoft.com/
    repository: azure-cognitive-services/textanalytics/sentiment:3.0-en
    args:
      eula: accept
      billing: https://azcoglang.cognitiveservices.azure.com/
      apikey: xxxxxxxxxxxxxxxxxxxxxxxx
      http_proxy: http://xxxx.com:xxx
```

5 REFERENCES

1. https://learn.microsoft.com/en-us/azure/cognitive-services/what-are-cognitive-services

2. https://learn.microsoft.com/en-us/azure/cognitive-services/cognitive-services-container-support

7. https://github.com/Azure-Samples/cognitive-services-containers-samples/tree/master/python/Sentiment
