
```python
import math

import numpy as np

# ========== OTHERWISE 3D is Enabled ==========
# (Fragment: head_pose, face_landmarks_list, pitch_cb, yaw_cb, state,
#  rs_camera, top, bottom, left, right, outimage and face_image_roi
#  are defined elsewhere in the application.)

# Estimate head orientation from the detected facial landmarks.
_, euler_angle = head_pose.get_head_pose(face_landmarks_list)

pitch = euler_angle[0]  # in degrees
yaw = euler_angle[1]
roll = euler_angle[2]  # currently unused

# Smooth pitch/yaw over recent frames; the viewer state expects radians.
pitch_cb.append(math.radians(pitch))
state.pitch = pitch_cb.average
yaw_cb.append(math.radians(yaw))
state.yaw = yaw_cb.average

print("pitch = {}; yaw = {}".format(pitch, yaw))

v, t = rs_camera.get_vertices_and_texture_coordinates()

# Compact the face ROI (rows top..bottom-1, columns left..right-1) to the
# front of the vertex/texcoord arrays. Copying in place is safe because
# the write index i never overtakes the read index ix.
# (Alternative: copy into separate arrays v2/t2 instead of in place.)
i = 0
for height in range(top, bottom):
    for width in range(left, right):
        ix = height * rs_camera.w + width
        v[i] = v[ix]
        t[i] = t[ix]
        i += 1

# Keep only the i compacted entries; without the slice, the untouched
# entries for the rest of the frame would still be drawn.
state.verts = v[:i].view(np.float32).reshape(-1, 3)  # xyz
state.texcoords = t[:i].view(np.float32).reshape(-1, 2)  # uv

outimage.fill(0)
state.pointcloud(face_image_roi, painter=False)

aligned_im_face = outimage[0:360, 0:640]
aligned_ir_face = outimage[0:360, 0:640]
```
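The per-pixel loop above can also be written as a NumPy slice, which avoids the Python-level loop and leaves `v`/`t` untouched. This is a minimal sketch, assuming the vertices and texture coordinates have already been viewed as plain `float32` arrays of shape `(N, 3)` and `(N, 2)`, stored row by row with `width` entries per row (as `ix = height * rs_camera.w + width` implies). `crop_roi_vertices` is a hypothetical helper name.

```python
import numpy as np


def crop_roi_vertices(verts, texcoords, width, top, bottom, left, right):
    """Return the xyz vertices and uv texcoords inside a pixel ROI.

    verts: (N, 3) float array, texcoords: (N, 2) float array, one entry per
    pixel in row-major order. Rows top..bottom-1 and columns left..right-1
    are kept, in the same order as the nested loop.
    """
    height = verts.shape[0] // width
    v_roi = verts.reshape(height, width, 3)[top:bottom, left:right].reshape(-1, 3)
    t_roi = texcoords.reshape(height, width, 2)[top:bottom, left:right].reshape(-1, 2)
    return v_roi, t_roi
```

In the fragment above it would be called roughly as `state.verts, state.texcoords = crop_roi_vertices(v.view(np.float32).reshape(-1, 3), t.view(np.float32).reshape(-1, 2), rs_camera.w, top, bottom, left, right)`.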


I recall an example of someone doing a similar project successfully using the PCL (Point Cloud Library) software, OpenCV and a Kinect camera. The description of the YouTube video linked to below says that they used OpenCV's Haar Cascade Classifier.

https://www.youtube.com/watch?v=4fJCAQZpvKk

Many other face detection projects that use the Haar Cascade can be found on YouTube with the search term 'opencv face recognition haar cascade'.
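As a rough sketch of how a Haar cascade detection could feed the `top`/`bottom`/`left`/`right` crop bounds used in the code above: OpenCV's `CascadeClassifier.detectMultiScale` returns face rectangles as `(x, y, w, h)`, which need padding and clamping to the frame. The helper names, the 10% padding and the detection parameters are illustrative assumptions; also note the rectangle is in color-image coordinates, so it only lines up with depth pixels if the frames are aligned.

```python
def face_rect_to_roi(rect, frame_w, frame_h, pad=0.1):
    """Convert an (x, y, w, h) face rectangle into clamped crop bounds.

    Returns (top, bottom, left, right) with bottom/right exclusive, to match
    range(top, bottom) / range(left, right) loops over the crop.
    """
    x, y, w, h = rect
    dx, dy = int(w * pad), int(h * pad)
    left = max(0, x - dx)
    top = max(0, y - dy)
    right = min(frame_w, x + w + dx)
    bottom = min(frame_h, y + h + dy)
    return top, bottom, left, right


def detect_largest_face(gray):
    """Run OpenCV's bundled frontal-face Haar cascade; return the largest face or None."""
    import cv2  # imported lazily so face_rect_to_roi works without OpenCV

    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    if len(faces) == 0:
        return None
    return max(faces, key=lambda r: r[2] * r[3])  # largest area
```

Picking the largest rectangle is a simple heuristic for "the face nearest the camera"; with several people in view, tracking a face across frames would be more robust.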

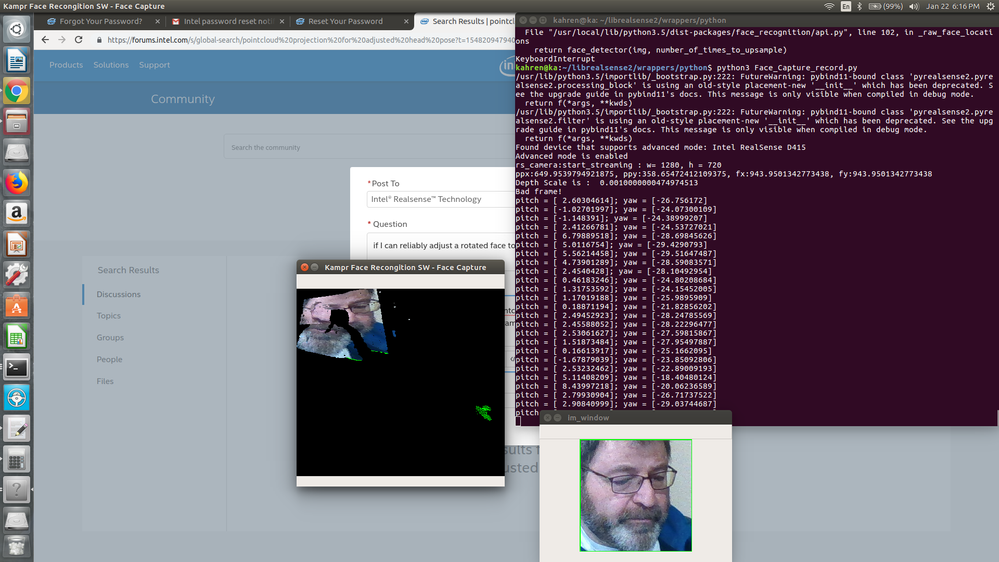

Regarding your current project, which modifies openCV_pointcloud_viewer: looking at the point cloud in your image, I wonder whether the problem is related to the distance of your face from the camera. RealSense cameras have a minimum depth sensing distance (MinZ). If something is closer to the camera than this minimum distance, the depth image starts breaking up.

The default minimum distances for the 400 Series cameras are 0.3 m for the D415 and 0.2 m for the D435.

The minimum distance can be reduced, allowing good detection at closer range, either by using a lower resolution for the camera stream or by adjusting the 'Disparity Shift' value (which allows a smaller minimum distance at the cost of reducing the maximum sensing distance, MaxZ).
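Why both of these work can be seen from the stereo relation Z = f·B/d (depth from focal length in pixels, baseline and disparity). The sketch below assumes the commonly quoted 126-pixel disparity search window, a horizontal focal length of ½·Xres / tan(HFOV/2), and that Disparity Shift moves that window to start at the shift value. The D435 figures used in the tests (50 mm baseline, 87° depth HFOV) are nominal values assumed here, not measured calibration.

```python
import math

DISPARITY_RANGE_PX = 126  # assumed disparity search window, in pixels


def focal_length_px(x_res, hfov_deg):
    """Horizontal focal length in pixels from resolution and horizontal FOV."""
    return 0.5 * x_res / math.tan(math.radians(hfov_deg) / 2)


def depth_limits_m(x_res, hfov_deg, baseline_mm, disparity_shift=0):
    """Approximate (MinZ, MaxZ) in metres for a stereo depth camera.

    Depth is Z = f * B / d, and the searchable disparities run from
    disparity_shift to disparity_shift + DISPARITY_RANGE_PX.
    """
    fb = focal_length_px(x_res, hfov_deg) * baseline_mm / 1000.0  # px * metres
    min_z = fb / (disparity_shift + DISPARITY_RANGE_PX)
    max_z = math.inf if disparity_shift == 0 else fb / disparity_shift
    return min_z, max_z
```

With these assumed values, `depth_limits_m(1280, 87, 50)` gives a MinZ of about 0.27 m and `depth_limits_m(848, 87, 50)` about 0.18 m: a lower resolution means a smaller focal length in pixels, hence a smaller MinZ. A non-zero `disparity_shift` lowers MinZ further but makes MaxZ finite, which matters when the target is around 1 m away.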

An easy way to tell whether minimum distance is the cause of your problem would be to simply move back from the camera a little and see if the broken black areas of the image clear up into a good detection.
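The same check can be made numerically from the depth frame. A minimal sketch, assuming the depth image has been converted with `np.asanyarray(depth_frame.get_data())` and the scale read from `get_depth_scale()` on the depth sensor; RealSense reports pixels with no valid depth as 0. The helper names are hypothetical.

```python
import numpy as np


def invalid_depth_fraction(depth, top, bottom, left, right):
    """Fraction of ROI pixels with no depth (reported as 0)."""
    roi = np.asarray(depth)[top:bottom, left:right]
    if roi.size == 0:
        return 0.0
    return float(np.count_nonzero(roi == 0)) / roi.size


def median_valid_depth_m(depth, top, bottom, left, right, depth_scale):
    """Median distance in metres of the valid ROI pixels, or None if none are valid."""
    roi = np.asarray(depth)[top:bottom, left:right]
    valid = roi[roi > 0]
    if valid.size == 0:
        return None
    return float(np.median(valid)) * depth_scale
```

If the hole fraction over the face is high while the median valid depth sits near the camera's MinZ, minimum distance is the likely cause; if the face is well beyond MinZ, the holes have some other cause.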


MartyG, thank you for your quick response and the YouTube link. I am looking for something similar, which is what led me to the openCV_pointcloud_viewer sample app.

My application needs to handle faces that are far from the camera; generally, 1 meter away is the sweet spot. openCV_pointcloud_viewer.py works well enough standalone, but it leaves view adjustment to the user via the mouse, and I am trying to drive the view programmatically by detecting head pose and feeding it back. The other differences are that the sample calls decimate, which reduces the resolution quite a bit, and that it does not align the color frame to the depth frame. Should I be calling decimate and not doing alignment?
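Feeding head pose into the view amounts to smoothing the angles and building the rotation the viewer applies. A minimal sketch: `MovingAverage` is a hypothetical stand-in for the `pitch_cb`/`yaw_cb` buffers in the code above (only `append` and `average` are assumed), and `view_rotation` composes Ry(yaw)·Rx(pitch), which is our understanding of how the sample's `AppState.rotation` combines its `cv2.Rodrigues` rotations; check it against your copy of the sample.

```python
from collections import deque

import numpy as np


class MovingAverage:
    """Fixed-size moving average (hypothetical stand-in for pitch_cb / yaw_cb)."""

    def __init__(self, size=5):
        self._values = deque(maxlen=size)  # oldest value drops out automatically

    def append(self, value):
        self._values.append(value)

    @property
    def average(self):
        return sum(self._values) / len(self._values) if self._values else 0.0


def view_rotation(pitch, yaw):
    """Rotation matrix Ry(yaw) @ Rx(pitch); angles in radians."""
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cp, -sp], [0, sp, cp]], dtype=np.float32)
    ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]], dtype=np.float32)
    return ry @ rx
```

A longer buffer gives a steadier view but lags behind fast head movements; a few frames is usually a reasonable starting point to tune from.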


If precision is important, my personal view is that the Decimation filter should be regarded as a fallback for when it is not possible to implement Align, as Align should be more precise.
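To see why decimation costs precision, here is a simplified illustration of the idea: each n×n block of the depth image collapses to a single value, so a magnitude of 2 halves the resolution in each direction. The sample configures the SDK filter with `rs.decimation_filter()` and `set_option(rs.option.filter_magnitude, ...)`; the function below is not the SDK's implementation, and taking the median of the non-zero pixels in each block is an assumption made for illustration.

```python
import warnings

import numpy as np


def decimate_depth(depth, n):
    """Downsample a depth image by n in each axis (simplified illustration).

    Each n x n block becomes the median of its non-zero (valid) pixels, or 0
    if the block has no valid depth. Rows/columns that do not fill a whole
    block are dropped.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = (depth.shape[0] // n) * n, (depth.shape[1] // n) * n
    blocks = depth[:h, :w].reshape(h // n, n, w // n, n).swapaxes(1, 2)
    blocks = blocks.reshape(h // n, w // n, n * n)
    masked = np.where(blocks > 0, blocks, np.nan)  # treat 0 as "no data"
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", RuntimeWarning)  # all-NaN blocks
        out = np.nanmedian(masked, axis=2)
    return np.nan_to_num(out, nan=0.0)
```

Each output pixel summarizes n² input pixels, which smooths noise and fills small holes but blurs fine facial detail, which is the trade-off against the full-resolution, aligned data.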

Alignment is a bit harder with point clouds than with 2D data, though, because you need to take the camera's extrinsics into account to get accurate results. The RealSense Viewer has built-in color and depth alignment for point clouds; otherwise, you have to write the alignment code for your own application yourself.
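The core of such alignment code is: move each depth-camera 3D point into the color camera's frame with the depth-to-color extrinsics, then project it with the color intrinsics to find its color pixel. A minimal sketch that ignores lens distortion; the SDK offers `rs2_transform_point_to_point` and `rs2_project_point_to_pixel` for these steps. If you take extrinsics from pyrealsense2 (for example `depth_profile.get_extrinsics_to(color_profile)`), its `rotation` is a flat, column-major list of 9 values, so `np.array(extr.rotation).reshape(3, 3).T` gives the matrix used here. The function name is hypothetical.

```python
import numpy as np


def depth_point_to_color_pixel(point, rotation, translation, fx, fy, ppx, ppy):
    """Map a 3D point in depth-camera coordinates to a color-image pixel.

    rotation: 3x3 matrix, translation: length-3 vector in metres (the
    depth-to-color extrinsics). fx, fy, ppx, ppy: color intrinsics.
    Uses plain pinhole projection (no distortion model).
    """
    p = (np.asarray(rotation, dtype=float) @ np.asarray(point, dtype=float)
         + np.asarray(translation, dtype=float))
    u = fx * p[0] / p[2] + ppx
    v = fy * p[1] / p[2] + ppy
    return u, v
```

Applied to every vertex, the resulting (u, v) values, divided by the color image width and height, play the role of the texture coordinates the point cloud sample draws with.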
